In the modern business landscape, organizations are continually seeking efficient ways to process, integrate, engineer, and analyze vast amounts of data. One powerful option for achieving this is Microsoft Fabric, particularly its Data Factory workload.

Microsoft Fabric represents a major advancement in data and AI services by unifying these capabilities into a single, cohesive platform. This integration democratizes data analysis and helps businesses harness the power of AI more effectively. With Data Factory as a core component, Microsoft Fabric aims to simplify complex data integration, enabling businesses to embrace new digital transformation opportunities and drive innovation.

This blog explores Data Factory in Microsoft Fabric and the robust data integration capabilities it offers businesses.

What is Data Factory?

Data Factory is a powerful integration service within the Microsoft Fabric platform that automates and orchestrates data workflows and handles the entire ETL (extract, transform, load) process.
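To make the three ETL stages concrete, here is a minimal Python sketch of the pattern itself. It does not use any Fabric or Data Factory API: the in-memory CSV string, the function names `extract`, `transform`, and `load`, and the list standing in for a destination table are all hypothetical placeholders for a real source system and a Lakehouse or warehouse table.

```python
"""Conceptual ETL sketch: extract -> transform -> load.

Plain Python only; this is NOT the Fabric Data Factory API.
"""
import csv
import io
from typing import Iterable

# Hypothetical raw source data standing in for an export from some system.
RAW_CSV = """order_id,region,amount
1,West,120.50
2,East,80.00
3,West,
4,East,45.25
"""


def extract(raw: str) -> list[dict[str, str]]:
    """Extract: read rows from the source (here, an in-memory CSV string)."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: Iterable[dict[str, str]]) -> list[dict[str, object]]:
    """Transform: skip rows with a missing amount and convert column types."""
    cleaned: list[dict[str, object]] = []
    for row in rows:
        if not row["amount"]:
            continue  # data cleansing: drop incomplete records
        cleaned.append({
            "order_id": int(row["order_id"]),
            "region": row["region"],
            "amount": float(row["amount"]),
        })
    return cleaned


def load(rows: list[dict[str, object]], destination: list) -> int:
    """Load: append rows to the destination (a list standing in for a table)."""
    destination.extend(rows)
    return len(rows)


if __name__ == "__main__":
    table: list = []
    loaded = load(transform(extract(RAW_CSV)), table)
    print(f"Loaded {loaded} rows")
```

Keeping each stage as a separate function mirrors how a data integration tool separates connecting to a source, shaping the data, and writing it to a destination.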

Microsoft Fabric Data Factory: A robust data integration tool within the Fabric environment

Data Factory in Fabric provides a modern data integration experience that lets data professionals ingest, prepare, and transform data from disparate sources such as lakehouses, data warehouses, and databases. With this trusted experience, data professionals can extract, load, and transform data for their organization. Its data orchestration capabilities also let data and business users build simple or complex workflows tailored to their specific data integration needs.

Data Factory in Microsoft Fabric also features Fast Copy, which accelerates data movement in both dataflows and data pipelines. Fast Copy lets you transfer large volumes of data to your preferred storage destinations quickly. Most importantly, it helps you populate your Microsoft Fabric Lakehouse and Data Warehouse for analysis, further improving the efficiency of your data operations.

High-level features of Data Factory in Microsoft Fabric

The high-level features of Fabric Data Factory, namely Dataflows and Data pipelines, provide a comprehensive solution for streamlining data integration workflows with precision and efficiency. Let's explore these features in detail and see how they change data management and processing for modern businesses.

Dataflows

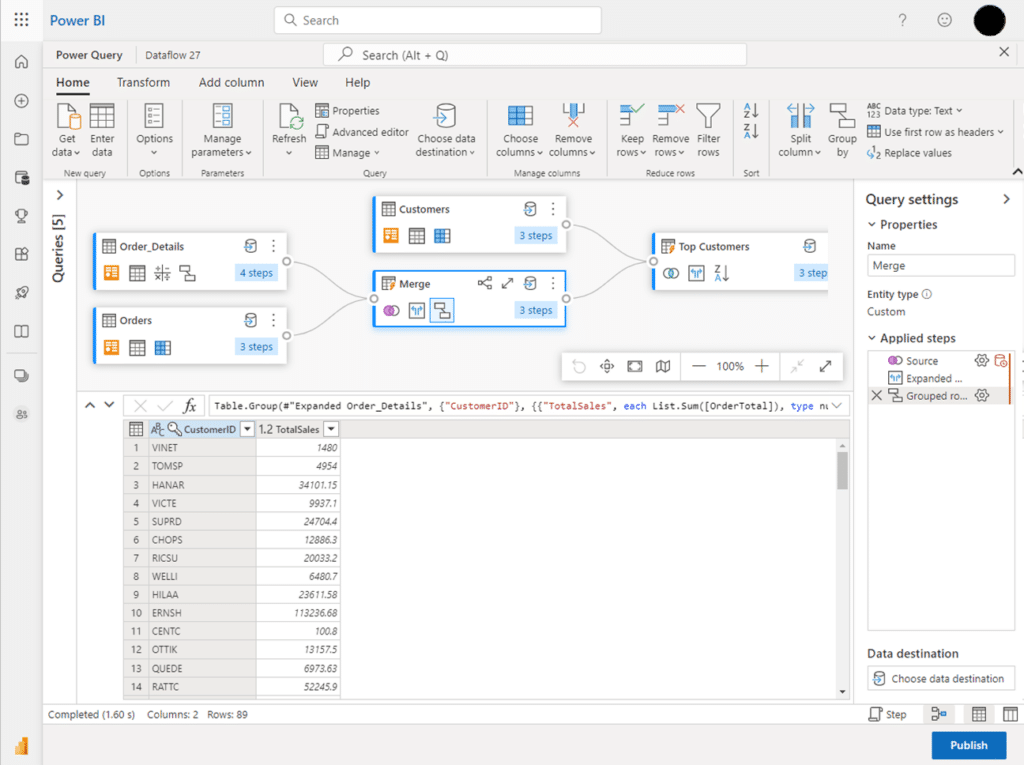

Data Factory Dataflows in Microsoft Fabric provide a low-code interface for data ingestion and transformation. This user-friendly environment lets users access data from hundreds of diverse sources, and over 300 built-in transformation functions let them shape that data to their specific requirements. After transformation, the resulting data can be loaded into various destinations, such as a Lakehouse or an Azure SQL database. Dataflows offer flexible execution options, including manual or scheduled refresh, and can be integrated into broader data pipeline orchestrations.

Dataflows in Data Factory offer a visual Power Query experience that integrates with other Microsoft products and services, such as Excel, Power BI, Microsoft Dynamics 365 Insights applications, and more. This makes data ingestion and transformation approachable for data professionals of all skill levels. The low-code interface supports essential tasks such as joins, aggregations, data cleansing, and custom transformations, all within a highly visual environment.
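As a rough illustration of what those join, aggregation, and cleansing steps do to the data, the following Python sketch applies them to two small in-memory tables. It is an analogy only, not Power Query M and not a Fabric API; the `customers` and `orders` tables and the helper functions are hypothetical.

```python
from collections import defaultdict

# Hypothetical sample tables; in a dataflow these would come from connected sources.
customers = [
    {"customer_id": 1, "name": " Contoso ", "segment": "Enterprise"},
    {"customer_id": 2, "name": "Fabrikam", "segment": "SMB"},
    {"customer_id": 3, "name": "Northwind", "segment": "Enterprise"},
]
orders = [
    {"order_id": 10, "customer_id": 1, "amount": 250.0},
    {"order_id": 11, "customer_id": 2, "amount": 100.0},
    {"order_id": 12, "customer_id": 1, "amount": 50.0},
    {"order_id": 13, "customer_id": 3, "amount": 75.0},
    {"order_id": 14, "customer_id": 99, "amount": 20.0},  # no matching customer
]


def clean_customers(rows: list[dict]) -> list[dict]:
    """Cleansing step: trim stray whitespace from customer names."""
    return [{**row, "name": row["name"].strip()} for row in rows]


def inner_join(left: list[dict], right: list[dict], key: str) -> list[dict]:
    """Join step: keep only left rows whose key also appears in right.

    Assumes `key` is unique in `right` (one customer per customer_id).
    """
    index = {row[key]: row for row in right}
    return [{**row, **index[row[key]]} for row in left if row[key] in index]


def total_by(rows: list[dict], group_key: str, value_key: str) -> dict:
    """Aggregation step: sum value_key for each distinct group_key."""
    totals: dict = defaultdict(float)
    for row in rows:
        totals[row[group_key]] += row[value_key]
    return dict(totals)


if __name__ == "__main__":
    joined = inner_join(orders, clean_customers(customers), "customer_id")
    print(total_by(joined, "segment", "amount"))
```

Running the block returns `{'Enterprise': 375.0, 'SMB': 100.0}`; order 14 is dropped because it has no matching customer, just as an inner join step in a visual query editor would drop it.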

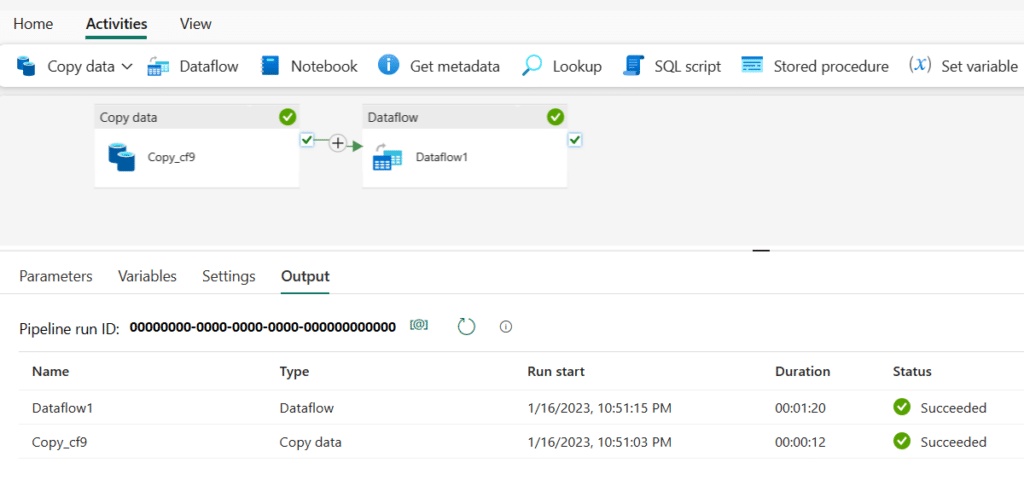

Data pipelines

Data Factory pipelines offer powerful, cloud-scale workflow capabilities. You can use them to build complex workflows that refresh dataflows and move large datasets efficiently, and to define sophisticated control flow that manages your data processing tasks.

Data Factory pipelines let you build robust ETL (Extract, Transform, Load) and broader data integration workflows. These workflows can handle a multitude of tasks at scale by using the built-in control flow capabilities of data pipelines.

Data Factory combines low-code dataflow refreshes with configuration-driven copy activities within a single pipeline, making it easy to build end-to-end ETL workflows. For advanced scenarios, users can add code-first activities such as Spark notebooks, SQL scripts, and stored procedures for maximum flexibility in their data processing.
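The dependency-driven control flow described above can be modeled in a few lines of Python. This is a conceptual sketch, not how Fabric actually executes pipelines: the activity names and functions are hypothetical stand-ins for a dataflow refresh, a copy activity, a notebook, and a stored procedure, and the runner models only a simple "run on success" dependency, stopping at the first failure.

```python
from collections.abc import Callable
from graphlib import TopologicalSorter


# Hypothetical activities standing in for real pipeline steps.
def refresh_dataflow() -> None:
    print("Refreshing dataflow")


def copy_data() -> None:
    print("Copying data to the lakehouse")


def run_notebook() -> None:
    print("Running Spark notebook")


def run_stored_procedure() -> None:
    print("Running stored procedure")


ACTIVITIES: dict[str, Callable[[], None]] = {
    "refresh_dataflow": refresh_dataflow,
    "copy_data": copy_data,
    "run_notebook": run_notebook,
    "run_stored_procedure": run_stored_procedure,
}

# Each activity maps to the set of activities that must succeed before it runs.
DEPENDENCIES: dict[str, set[str]] = {
    "refresh_dataflow": set(),
    "copy_data": {"refresh_dataflow"},
    "run_notebook": {"copy_data"},
    "run_stored_procedure": {"copy_data"},
}


def run_pipeline(
    activities: dict[str, Callable[[], None]],
    dependencies: dict[str, set[str]],
) -> list[str]:
    """Run activities in dependency order; stop at the first failure.

    Returns the names of the activities that completed successfully.
    """
    completed: list[str] = []
    for name in TopologicalSorter(dependencies).static_order():
        try:
            activities[name]()
        except Exception as exc:
            print(f"Activity {name!r} failed: {exc}; stopping the pipeline")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    print(run_pipeline(ACTIVITIES, DEPENDENCIES))
```

`graphlib.TopologicalSorter` guarantees that each activity runs only after everything it depends on. Because the notebook and stored procedure both depend only on the copy step, a real orchestrator could run them in parallel; this sketch runs them sequentially for simplicity.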