Data & AI Evangelist

Principal Data Architect

The rise of big data and advances in data science have made data storage and retrieval central to data engineering. With the amount of data created each day expected to reach a staggering 463 exabytes by 2025, data engineers and analysts have a unique chance to make a real impact. Organizations rely on data engineers to design, build, and maintain the infrastructure and systems that collect, store, process, and analyze data. Microsoft Fabric, a comprehensive analytics solution, gives data engineers a robust platform and a suite of powerful tools to tackle this deluge of data.

Within the Fabric ecosystem, data engineers can use built-in data processing capabilities, such as Data Factory and Apache Spark, to design and deploy data pipelines that handle massive volumes of data.

In this blog, we will explore data engineering in Microsoft Fabric while highlighting the key components that users can access through the data engineering homepage.

A brief revisit to Microsoft Fabric

Microsoft Fabric is an end-to-end, unified analytics platform, empowering organizations with seamless data integration, comprehensive analytics capabilities, and scalable solutions. It is an AI-powered platform that allows users to derive actionable insights from their data assets. Microsoft Fabric aims to allow businesses and data professionals to make the most out of their data in the age of data and AI.

Microsoft Fabric is built on a shared SaaS foundation that brings together experiences such as Data Engineering, Data Factory, Data Science, Data Warehouse, Real-Time Analytics, and Power BI. This integration provides:

- An extensive range of deeply integrated analytics.

- Shared experiences that are familiar and easy to learn.

- Easy access to, and reuse of, all assets for developers.

- A unified data lake that lets users keep their data in one place while using their preferred analytics tools.

- Centralized administration and governance across all experiences.

Data engineering in Microsoft Fabric

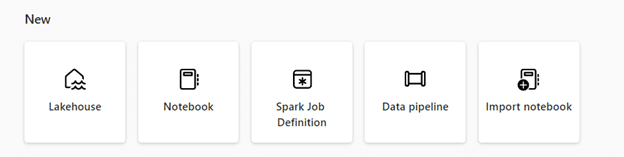

Data engineering in Microsoft Fabric lets you create and manage the data pipelines that form the backbone of modern data-driven organizations. Microsoft Fabric offers a range of data engineering capabilities that keep data accessible, organized, and of high quality. From the data engineering homepage, users can:

- Create a Lakehouse to store and manage their data.

- Design data pipelines that efficiently move data into the Lakehouse, wherever it comes from.

- Use Spark job definitions to submit batch or streaming jobs to the Spark cluster.

- Use notebooks to write code for data operations such as ingestion, preparation, and transformation.

Microsoft Fabric for data engineering has the potential to change how data engineers and other data professionals work. It adds an intelligent layer that helps them build on their skills and streamline their workflows. With integrated features such as Lakehouse, notebooks, Spark job definitions, and data pipelines, it offers a comprehensive solution for modern data management and processing.

Let’s explore these one by one:

Lakehouse

Lakehouse architectures in Microsoft Fabric let organizations store and manage both structured and unstructured data in a single repository. The Fabric Lakehouse is a flexible, scalable framework that integrates with data management and analytics tools to provide a comprehensive solution for data engineering and analytics.

Businesses can process and analyze this data with a multitude of tools and frameworks, from SQL-based queries and analytics to machine learning and other advanced analytics techniques. Data engineers interact with the data through the Lakehouse explorer on the main Lakehouse page, which lets users load data into the Lakehouse and browse it with the object explorer.
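
To make the idea concrete, here is a minimal, stdlib-only sketch that mimics the two areas the Lakehouse explorer shows: a "Files" area for raw data and a "Tables" area that can be queried with SQL. It is an analogy, not Fabric code: SQLite stands in for the Lakehouse's managed tables and SQL access, and the `load_file_to_table` helper, the sample CSV, and the `sales` table are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw file that would sit in the Lakehouse "Files" area.
RAW_SALES_CSV = """region,amount
north,120
south,80
north,50
"""

def load_file_to_table(conn: sqlite3.Connection, raw_csv: str, table: str) -> int:
    """Parse raw CSV text and load it into a table (SQLite stands in for a Lakehouse table).

    The table name is interpolated directly, so it must come from trusted code.
    """
    rows = list(csv.DictReader(io.StringIO(raw_csv)))
    conn.execute(f"CREATE TABLE IF NOT EXISTS {table} (region TEXT, amount REAL)")
    conn.executemany(
        f"INSERT INTO {table} (region, amount) VALUES (:region, :amount)", rows
    )
    conn.commit()
    return len(rows)

conn = sqlite3.connect(":memory:")
loaded = load_file_to_table(conn, RAW_SALES_CSV, "sales")

# Query the structured table with SQL, as an analyst would once the data is loaded.
totals = conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(loaded, totals)
```

The point of the split is that raw files stay available as-is while the loaded table gets a schema that SQL tools can rely on.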

Apache Spark job definition

An Apache Spark job definition is a set of instructions describing how a job runs on a Spark cluster. These instructions include the input and output data sources, the transformation logic, and the configuration settings for the Spark application. Spark job definitions let users submit batch and streaming jobs to the Spark cluster and apply various transformations to the data stored in their Lakehouse, among other tasks.
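
The parts of a job definition listed above can be modeled as plain data. The sketch below is a hypothetical representation, not Fabric's item schema or API: the class `SparkJobDefinition` and its field names (`main_file`, `reference_files`, `spark_conf`, and so on) are assumptions chosen to mirror the description. For comparison, it renders an equivalent argument list for Apache Spark's standard `spark-submit` tool; Fabric submits jobs on your behalf, so you would not run this command there.

```python
from dataclasses import dataclass, field

@dataclass
class SparkJobDefinition:
    """Hypothetical model of a Spark job definition (not Fabric's actual schema)."""
    name: str
    main_file: str                  # entry-point script, e.g. a PySpark .py file
    input_path: str                 # where the job reads its data from
    output_path: str                # where the job writes its results
    reference_files: list[str] = field(default_factory=list)
    spark_conf: dict[str, str] = field(default_factory=dict)

    def to_spark_submit(self) -> list[str]:
        """Render an equivalent spark-submit argument list, for illustration only."""
        args = ["spark-submit", "--name", self.name]
        for key, value in sorted(self.spark_conf.items()):
            args += ["--conf", f"{key}={value}"]
        if self.reference_files:
            args += ["--py-files", ",".join(self.reference_files)]
        # Application arguments follow the main file: here, input path then output path.
        args += [self.main_file, self.input_path, self.output_path]
        return args

job = SparkJobDefinition(
    name="daily-sales-batch",
    main_file="transform_sales.py",
    input_path="Files/raw/sales",
    output_path="Tables/sales_clean",
    reference_files=["helpers.py"],
    spark_conf={"spark.sql.shuffle.partitions": "8"},
)
print(" ".join(job.to_spark_submit()))
```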

To run a Spark job definition, users must associate it with at least one Lakehouse. This default Lakehouse serves as the default file system for the Spark runtime, so any Spark code that reads or writes data through a relative path is served from the default Lakehouse.
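
The relative-path rule can be illustrated with a small resolver. This is a sketch under assumptions: the URI layout `abfss://<workspace>@onelake.dfs.fabric.microsoft.com/<lakehouse>.Lakehouse/<path>` is assumed to match OneLake's ABFS path format, and `resolve_lakehouse_path` plus the workspace and Lakehouse names are hypothetical. Spark performs this resolution itself; the function only shows the rule.

```python
from urllib.parse import urlparse

ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"  # assumed OneLake endpoint

def resolve_lakehouse_path(path: str, workspace: str, default_lakehouse: str) -> str:
    """Return an absolute URI; relative paths resolve against the default Lakehouse."""
    if urlparse(path).scheme:  # already absolute (e.g. abfss://...), leave it alone
        return path
    # Assumption for this sketch: a leading slash still means "Lakehouse root".
    relative = path.lstrip("/")
    return f"abfss://{workspace}@{ONELAKE_HOST}/{default_lakehouse}.Lakehouse/{relative}"

print(resolve_lakehouse_path("Files/raw/sales.csv", "SalesWorkspace", "SalesLakehouse"))
```

Because every relative path is anchored to the same default Lakehouse, the same job code can be pointed at a different Lakehouse just by changing that association.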

Notebooks

A Microsoft Fabric Spark notebook is an interactive Spark computing environment. It lets users create and share documents that contain live code, equations, visualizations, and narrative text. Notebooks support writing and running code in multiple programming languages, such as Python, R, and Scala, and can be used for data ingestion, preparation, analysis, and other data-centric tasks.

Within Microsoft Fabric notebooks, users can:

- Get started with zero set-up effort.

- Analyze data in raw formats (CSV, TXT, JSON, etc.) and processed formats (Parquet, Delta Lake, etc.) using powerful Spark capabilities.

- Be productive with enhanced authoring capabilities and built-in data visualization.

- Easily explore and process data with an intuitive low-code experience.

- Keep data secure with built-in enterprise security features.
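
A typical first notebook cell ingests raw files in different formats and normalizes them for analysis. In a Fabric notebook this would normally be PySpark (for example `spark.read.json`); the sketch below uses only the Python standard library so it runs anywhere, and the sample records and the `normalize` helper are hypothetical.

```python
import csv
import io
import json
from statistics import mean
from typing import Optional

# Hypothetical raw inputs: the same kind of record arriving as CSV and as JSON lines.
RAW_CSV = "order_id,amount\n1,10.5\n2,4.5\n"
RAW_JSONL = '{"order_id": 3, "amount": "7"}\n{"order_id": 4, "amount": null}\n'

def normalize(record: dict) -> Optional[dict]:
    """Cast fields to consistent types; drop records with a missing amount."""
    if record.get("amount") in (None, ""):
        return None
    return {"order_id": int(record["order_id"]), "amount": float(record["amount"])}

csv_rows = list(csv.DictReader(io.StringIO(RAW_CSV)))
json_rows = [json.loads(line) for line in RAW_JSONL.splitlines() if line.strip()]

# Combine both sources, keeping only records that survive normalization.
orders = [r for r in map(normalize, csv_rows + json_rows) if r is not None]
print(len(orders), mean(o["amount"] for o in orders))
```

Normalizing types before combining sources matters because CSV yields strings while JSON may already carry numbers or nulls.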

Data pipelines

Data pipelines play a crucial role in data engineering. Microsoft Fabric data pipelines function as a series of interconnected steps. These steps are designed to collect, process, and transform data from its raw state into a suitable format for analysis and informed decision-making.
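
The "series of interconnected steps" idea can be sketched as steps that run in order, each receiving the previous step's output, with the run stopping at the first failure. This loosely mirrors an on-success dependency between pipeline activities; `run_pipeline` and the step functions are hypothetical and not part of any Fabric SDK.

```python
from typing import Any, Callable

Step = Callable[[Any], Any]

def run_pipeline(steps: list[tuple[str, Step]], data: Any) -> tuple[Any, list[str]]:
    """Run named steps in order, passing each output to the next; stop on the first error."""
    log = []
    for name, step in steps:
        try:
            data = step(data)
        except Exception as exc:  # a failed step prevents all downstream steps
            log.append(f"{name}: failed ({exc})")
            return None, log
        log.append(f"{name}: succeeded")
    return data, log

# Hypothetical steps: collect raw strings, clean them, convert them to numbers.
steps = [
    ("collect", lambda _: [" 3", "5 ", "x"]),
    ("clean", lambda rows: [r.strip() for r in rows if r.strip().isdigit()]),
    ("transform", lambda rows: [int(r) for r in rows]),
]
result, log = run_pipeline(steps, None)
print(result, log)
```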

Pipelines are commonly used to automate extract, transform, and load (ETL) processes that ingest transactional data from operational data stores into an analytical data store, such as a Lakehouse or data warehouse. Users can build and run pipelines interactively in the Microsoft Fabric user interface, combining Data Factory, dataflows, and Spark notebooks. Pipelines can run manually, on a schedule, or automatically when a condition is met.
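
The ETL pattern described above, moving transactional data into an analytical store, can be sketched end to end with SQLite standing in for both stores. This is an illustration under assumptions, not Fabric pipeline code: the `orders` and `daily_revenue` tables and the `etl` function are hypothetical. In Fabric, the same steps would more likely be a Copy activity followed by a notebook or dataflow transformation.

```python
import sqlite3

def etl(source: sqlite3.Connection, target: sqlite3.Connection) -> int:
    """Extract orders, aggregate revenue per day, and load the result; returns rows loaded."""
    # Extract: read raw transactional rows from the operational store.
    rows = source.execute("SELECT order_date, amount, status FROM orders").fetchall()

    # Transform: keep completed orders and total the revenue per day.
    revenue: dict[str, float] = {}
    for order_date, amount, status in rows:
        if status == "completed":
            revenue[order_date] = revenue.get(order_date, 0.0) + amount

    # Load: overwrite the analytical table so that reruns produce the same result.
    target.execute(
        "CREATE TABLE IF NOT EXISTS daily_revenue (day TEXT PRIMARY KEY, revenue REAL)"
    )
    target.execute("DELETE FROM daily_revenue")
    target.executemany("INSERT INTO daily_revenue VALUES (?, ?)", sorted(revenue.items()))
    target.commit()
    return len(revenue)

# Hypothetical operational data.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (order_date TEXT, amount REAL, status TEXT)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("2024-01-01", 20.0, "completed"), ("2024-01-01", 5.0, "cancelled"),
     ("2024-01-02", 12.5, "completed"), ("2024-01-01", 10.0, "completed")],
)
target = sqlite3.connect(":memory:")
loaded = etl(source, target)
print(loaded, target.execute("SELECT * FROM daily_revenue ORDER BY day").fetchall())
```

Overwriting the target on each run is one simple way to make a scheduled or re-triggered run safe to repeat; incremental loads are a common alternative when tables grow large.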

Pipelines are an integral component of data engineering. They provide a reliable, scalable, and efficient way to move data from its source to its desired destination.

Pioneer AI-enhanced data engineering and analysis with Confiz

The data engineering capabilities in Microsoft Fabric enable AI-enhanced data analysis and the automated creation of notebooks, reports, and dashboards. As a platform and toolset, Fabric helps data professionals achieve efficient data management, insightful analysis, and innovative machine learning solutions.

To explore how Confiz can help you leverage Microsoft Fabric’s capabilities for your data engineering needs, reach out to us today at marketing@confiz.com.